The Energy-Intelligence Hypothesis: Mapping AI’s Energy Bottlenecks

As AI advances, it’s not just chips or algorithms that are hitting limits—it’s the energy systems that power them.

I. The End of “More for Less”

For most of the last half-century, computing progress followed a simple promise: more for less. Each new generation of hardware delivered more capability while consuming less energy per operation. Koomey’s Law captured this: for decades, the number of computations per joule roughly doubled every 18 months. That trend hasn’t disappeared, but its meaning has fundamentally changed.

In the age of frontier AI, efficiency no longer reduces total energy use. It expands it. Compute has become so economically valuable that every efficiency gain is immediately reinvested into more compute. This is Jevons’ Paradox at work. As something becomes cheaper and more efficient, we use more of it. That dynamic is now obvious in the market. Hyperscalers are explicit that power is their binding constraint. Where and how fast they can deploy AI increasingly depends on where they can secure electrons.
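To make the rebound concrete, here is a minimal sketch. It assumes a constant-elasticity demand curve and assumes the cost of compute falls in proportion to energy per operation; both are illustrative assumptions, not claims about real markets. The function name and the elasticity values are hypothetical.

```python
def total_energy(efficiency_gain: float, elasticity: float,
                 base_compute: float = 1.0,
                 base_energy_per_op: float = 1.0) -> float:
    """Total energy use after an efficiency gain (baseline = 1.0).

    efficiency_gain: factor by which energy per operation falls
                     (2.0 means each operation needs half the energy).
    elasticity:      hypothetical demand elasticity for compute
                     (absolute value); not an empirical estimate.
    """
    energy_per_op = base_energy_per_op / efficiency_gain
    # Assumption: cost per operation tracks energy per operation, and
    # demand for compute scales as cost ** (-elasticity).
    compute = base_compute * efficiency_gain ** elasticity
    return compute * energy_per_op


# Same 2x efficiency gain, three hypothetical demand responses.
for elasticity in (0.5, 1.0, 1.5):
    energy = total_energy(efficiency_gain=2.0, elasticity=elasticity)
    print(f"elasticity={elasticity}: total energy = {energy:.2f}x baseline")
```

In this toy model, total energy falls only when elasticity is below 1. Once demand responds more than proportionally, the same efficiency gain raises total energy use, which is the Jevons dynamic described above.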

That realization has sparked a healthy debate online. One camp frames the AI race as an energy production race: can the U.S. scale generation fast enough? That framing is broadly correct. Energy supply matters enormously. Others respond that the real issue isn’t energy, it’s power capacity. Energy production only matters if you can actually deliver it. Wires, substations, transformers, gas pipelines, interconnection rights, and permitting often take longer to build than the power plant itself. Without enough power capacity, adding generation just shifts the bottleneck downstream. This distinction is also right, but we think we need to take the frame one level further.

II. The Energy-Intelligence Hypothesis

When we talk about AI’s energy constraint, the real unit of analysis isn’t just energy or power. It’s the entire energy supply chain of AI. From fuel to turbines, from turbines to transmission, from transmission to data centers, from data centers to cooling systems, and finally into racks and the GPU itself. Every step introduces loss, delay, and constraint. And pressure at any one layer can force inefficiency at the others. This is where the “Energy-Intelligence Hypothesis” comes in. The core claim is simple: if we continue shipping AI capability at the projected rate, compute demand will outpace the physical energy system required to power it. Worse, the rush to meet near-term demand will often lock in inefficient, near-sighted infrastructure choices that persist for decades. The constraint on AI progress is no longer just chips or algorithms. It is the full, end-to-end energy supply chain that turns fuel into usable intelligence.

There may even be a deeper, testable relationship here. Just as scaling laws link model performance to compute, there may be an analogous relationship between AI capability and system-level energy consumption. That’s above my pay grade for now. But the market signal is already clear: the marginal returns to energy in the AI race are diminishing because the system itself is hitting physical limits.

The scale of the challenge comes from a structural mismatch. Compute demand scales exponentially, driven by frontier model scaling. Energy infrastructure scales linearly, constrained by permitting, manufacturing, and construction cycles measured in years or decades. That mismatch is amplified by history. U.S. electricity demand was largely flat for over twenty years, leaving the sector without the institutional muscle or supply chains needed for rapid expansion. Now we’re staring at a world where data center power demand may grow from roughly 17 GW today to over 130 GW by 2030.
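A quick back-of-the-envelope sketch shows what that projection implies. Two inputs here are assumptions rather than figures from the projection: the five-year horizon (the exact base year behind "today" is not stated) and the 10 GW per year linear build rate, which is purely hypothetical.

```python
from typing import List, Optional


def implied_annual_growth(start_gw: float, end_gw: float, years: int) -> float:
    """Compound annual growth rate that takes start_gw to end_gw."""
    return (end_gw / start_gw) ** (1.0 / years) - 1.0


def exponential_demand(start_gw: float, rate: float, years: int) -> List[float]:
    """Demand path that compounds at a fixed annual rate."""
    return [start_gw * (1.0 + rate) ** t for t in range(years + 1)]


def linear_capacity(start_gw: float, added_gw_per_year: float, years: int) -> List[float]:
    """Capacity path that adds a fixed number of GW each year."""
    return [start_gw + added_gw_per_year * t for t in range(years + 1)]


def first_shortfall_year(demand: List[float], capacity: List[float]) -> Optional[int]:
    """First year index in which demand exceeds capacity, if any."""
    for year, (d, c) in enumerate(zip(demand, capacity)):
        if d > c:
            return year
    return None


YEARS = 5             # assumption: horizon from "today" to 2030
BUILD_RATE_GW = 10.0  # hypothetical linear build-out, GW added per year

rate = implied_annual_growth(17.0, 130.0, YEARS)
demand = exponential_demand(17.0, rate, YEARS)
capacity = linear_capacity(17.0, BUILD_RATE_GW, YEARS)

print(f"Implied annual growth: {rate:.1%}")
print(f"First shortfall year: {first_shortfall_year(demand, capacity)}")
for year, (d, c) in enumerate(zip(demand, capacity)):
    print(f"year {year}: demand {d:6.1f} GW, capacity {c:6.1f} GW")
```

Under those assumptions, the projection implies demand compounding at roughly 50% a year. Any fixed annual build rate eventually falls behind a curve like that; the build rate only changes how soon the gap opens.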

III. From Chips to Systems

So far, AI energy optimization has focused on chips and models (with a nod to cooling). Tokens per watt became the canonical metric. It’s a useful measure, but it only captures what happens once electricity has already arrived at the GPU. It ignores everything upstream that made that electron usable in the first place. The modern AI stack is no longer just software running on silicon. It is a physical infrastructure system.

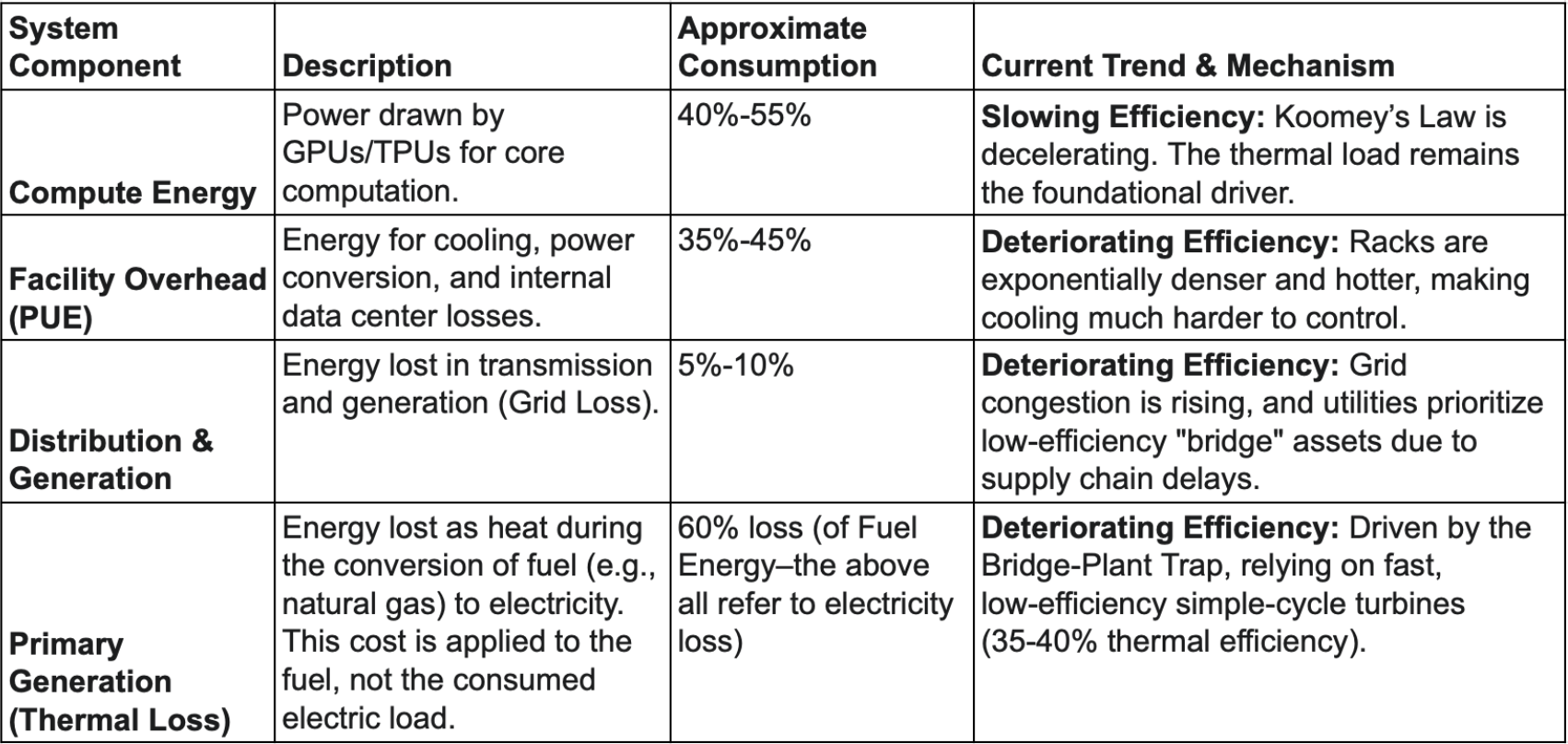

We break total system energy into four measurable components: 1) compute-level, 2) data-center-level, 3) grid-level, and 4) power-plant-level energy. The point of doing so isn’t academic bookkeeping. It’s to identify where losses compound and where constraints harden over time. At the compute layer, GPUs and TPUs draw enormous power and define the thermal load. At the facility layer, a large share of energy is consumed by cooling, power conversion, and internal losses, captured imperfectly by PUE. At the grid layer, electricity is lost in transmission and constrained by congestion and aging equipment. And at the generation layer, massive amounts of energy are lost as heat when fuel is converted into electricity—an upstream cost that never shows up in “data center load” metrics but absolutely matters for the real resource footprint of AI.

The lesson is straightforward: marginal efficiency gains at the chip level are no longer sufficient to overwhelm compounding losses across the rest of the energy supply chain. Chips and algorithms are necessary, but they are no longer the dominant constraint.
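The way losses compound across the layers can be sketched with simple multiplication. The efficiency and PUE values below are illustrative placeholders, not measurements, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EnergyChain:
    """Simplified fuel-to-chip chain; all fields are illustrative inputs."""
    plant_efficiency: float  # fraction of fuel energy that becomes electricity
    grid_efficiency: float   # fraction of generated electricity reaching the facility
    pue: float               # total facility energy / IT equipment energy (>= 1.0)

    def fuel_per_it_unit(self) -> float:
        """Units of fuel energy needed to deliver one unit of energy to IT equipment."""
        return self.pue / (self.plant_efficiency * self.grid_efficiency)

    def end_to_end_efficiency(self) -> float:
        """Fraction of fuel energy that ends up at the IT equipment."""
        return 1.0 / self.fuel_per_it_unit()


# Hypothetical values chosen only to show the arithmetic.
chain = EnergyChain(plant_efficiency=0.40, grid_efficiency=0.95, pue=1.3)
print(f"Fuel energy per unit of IT energy: {chain.fuel_per_it_unit():.2f}")
print(f"End-to-end efficiency: {chain.end_to_end_efficiency():.1%}")

# A less efficient plant upstream raises fuel burn per token
# even though nothing at the chip or facility layer changed.
stressed = EnergyChain(plant_efficiency=0.33, grid_efficiency=0.95, pue=1.3)
print(f"With a less efficient plant: {stressed.fuel_per_it_unit():.2f}")
```

Because the layers multiply, a metric like tokens per watt at the GPU sees only the final factor. In this sketch, several units of fuel energy are spent for every unit that reaches the rack, and a worse choice at any upstream layer shows up directly in that ratio.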

IV. The System-Level Rebound & Slowdown

The energy economy of AI has entered a system-level rebound. Improvements at the compute layer drive greater total system stress, and that stress forces choices that reduce efficiency elsewhere. Interconnection queues have exploded. Hyperscalers now prioritize speed-to-power over cost or sustainability. Every short-term fix, whether it be emergency turbines, incremental transformers, or ad hoc cooling, becomes long-lived infrastructure. Once built, inefficiency calcifies. Even as chips get more efficient, total system energy continues to scale with compute.

This is why focusing narrowly on generation or power capacity misses the deeper issue. Pressure at one layer of the system propagates to the others. The rebound ensures that constraints from cooling, distribution, and generation eventually overwhelm dwindling efficiency gains at the hardware level.

The buffer that once made compute feel lightweight is gone. Hardware efficiency is slowing as Koomey’s Law loses momentum, constrained by the end of Dennard Scaling and thermodynamic limits. Facility efficiency has plateaued as PUE improvements stall even while rack densities and heat loads explode. Transmission and distribution losses are rising as congestion worsens and utilities are forced to rely on older, lower-efficiency equipment due to transformer and switchgear backlogs. And generation efficiency is declining at the margin as providers turn to fast-to-deploy but inefficient turbines to meet urgent demand, locking in higher fuel burn for decades.

V. Startup Opportunities

Behind every token is a stack of software, hardware, and physical energy infrastructure, the last of which is being strained to its limits by compounding token growth. We must address the physical layer head-on. We highlight four immediate strategic shifts necessary for investors and founders to manage this new reality, which we believe offer significant opportunities for new companies.

1. High-Velocity Supply Buildout

The AI demand shock requires the power sector to move from multi-decade planning to rapid, industrial-scale deployment. The opportunity is not just building more assets, but building them faster with fewer bottlenecks:

- Infrastructure bypass: modular power systems, solid-state transformers, utility-scale batteries to avoid constrained grid components and accelerate time-to-power

- Supply-chain expansion: manufacturing capacity and redesigns for turbines, transformers, generators, switchgear; upstream access to copper, lithium, rare earths

- AI-unbundled EPC and consultants: automate engineering analysis, interconnection and permitting modeling, procurement planning, and project updates to compress months of work into weeks

- Grid enablement: grid-enhancing technologies, advanced power electronics, standardized/modular construction for faster interconnection and higher utilization

2. Data Center Facility Resilience

If generation and delivery are long-cycle constraints, heat is the immediate one. Rising chip density is driving cooling and conversion losses higher. Some ways we see that being addressed are:

- Advanced thermal management: liquid immersion, direct-to-chip cooling, next-gen UPS/PDUs to stabilize or reduce PUE

- Extreme siting: data centers in heat-resilient environments (underground, underwater, etc.)

- Stranded energy recapture: heat exchangers and campus-level thermal reuse

- On-site flexibility: batteries and behind-the-meter (BTM) generation for resiliency, demand response, and peak load reduction

3. Frontier Energy Supply & Bypass

Traditional generation alone is unlikely to meet long-term AI demand. The system needs firm power closer to load, outside congested grids.

- BTM / co-located generation: modular nuclear, advanced geothermal, long-duration energy storage

- Energy-agnostic sites: data center designs that can rapidly integrate non-traditional power sources to bypass interconnection delays

4. Digital Infrastructure: Planning, Visibility, and Flexibility

The energy system is still planned with manual studies and low-resolution data, which breaks as AI load scales. This opens up opportunities for:

- Grid data platforms: node-level visibility into capacity, congestion, interconnection queues, and future prices

- Predictive resilience: AI-driven load management and forecasting to anticipate stress and optimize flows

- Intelligent load + virtual power plants (VPPs): energy-aware scheduling and aggregation of flexible AI load into grid assets

In future issues, I’ll go deeper into each of these opportunity areas. For now, the key point is this: the constraint on AI is no longer just chips or algorithms. It’s the energy supply chain that powers them. And that system is under more strain than most people realize.