In January 2023, semiconductors were not the rage. NVIDIA was worth $360B. Memory was a sleepy commodity. Hyperscaler capex totaled $130B+, relatively flat from the year prior. Approximately 100k H100-equivalent compute systems were deployed globally, with few having ever heard the term “H100” before.

We were past the ChatGPT moment, but the gears of the semiconductor supercycle were only beginning to turn.

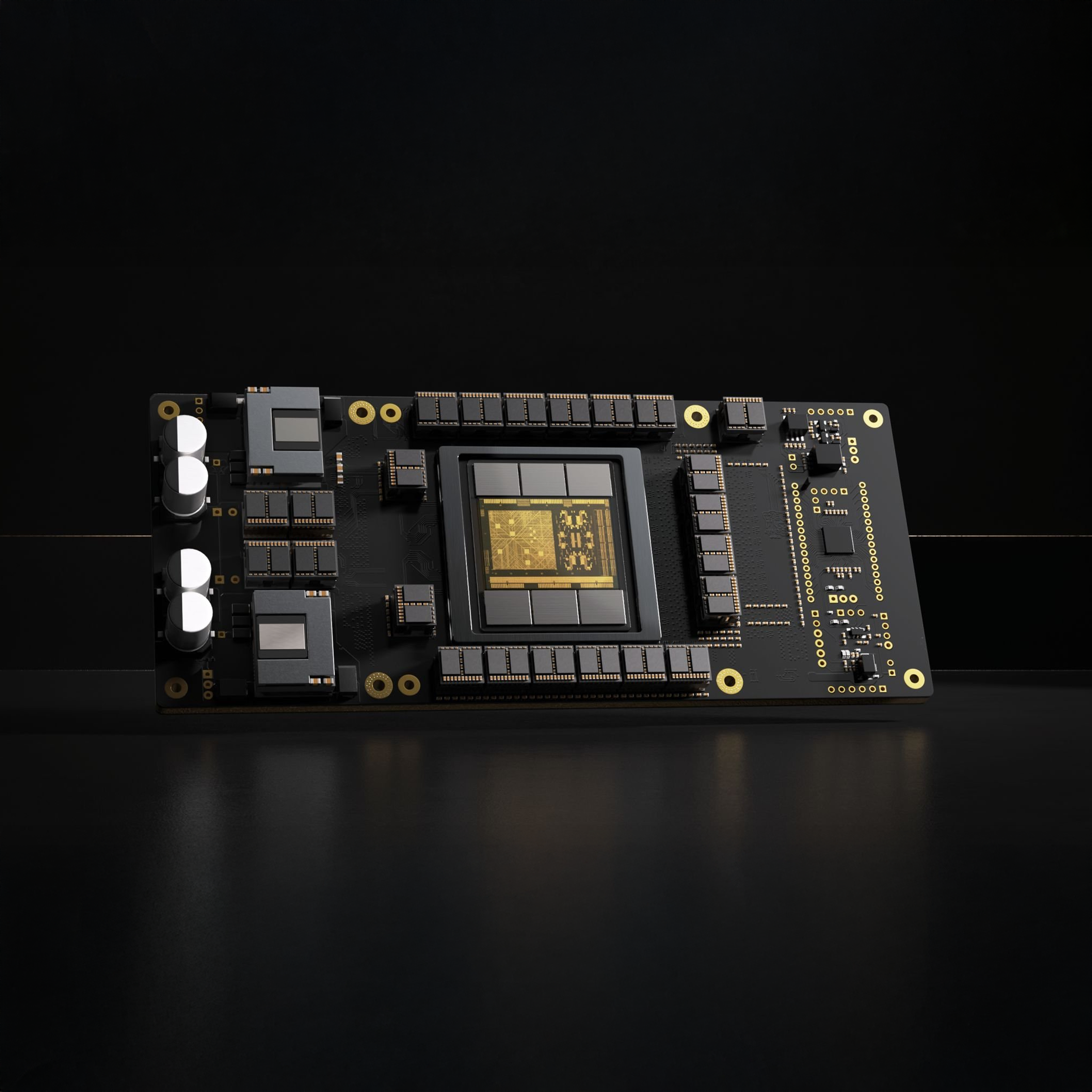

Today, NVIDIA is worth over $4.5T. Multi-trillion-parameter models demand staggering memory capabilities, driving sky-high DRAM prices and scarce supply. Hyperscaler capex in 2025 surpassed $400B and is expected to grow to $600B in 2026. As of Q3 2025, more than 3 million H100-equivalent systems are training models and running inference. Executives now brag about their secured GPU shipments as they compete in an economy increasingly organized around AI.

Back to early 2023.

Our belief was that this was about to change. AI demand was set to explode, and the world did not yet have sufficient compute to meet the need. Scaling laws suggested that no amount of computation would ever truly be enough to deliver the magical experiences AI could unlock. A demand tsunami was coming.

We intuited that a window of opportunity was opening for entrants to capitalize on the moment. In February, I shared this view with the Wall Street Journal: “There’s new openings for attack and opportunity for those players because the types of chips that are going to most efficiently run these algorithms are different from a lot of what’s already out there.”

That same day, I met Gavin Uberti, the CEO and cofounder of Etched.

Gavin had a pitch-perfect understanding of what was about to happen: transformer dominance would expand; the GPU bottleneck would intensify; inference would become the all-important battleground; and the power of purpose-built hardware would become clear.

We led the Seed round in Etched in March 2023 and have since worked with Gavin and the team as they build, in their words, the hardware for superintelligence. This experience, combined with studying the broader compute supercycle, helped crystallize our conviction.

Our Compute Thesis

Our compute thesis is simple. Alpha exists at seed for compute investing. Every major technology shift requires a rebuild of its underlying infrastructure. As compute drives intelligence, demand for computation is growing exponentially, forcing a massive capex buildout across silicon, power, networking, and cooling. Fundamental bottlenecks are emerging. Incumbents are being pushed beyond incremental improvement. New opportunities are opening for founders taking first-principles approaches to rearchitect how computation is produced and scaled. Yet the majority of seed investors shy away from compute deals.

We had noticed that fewer VCs spend time in the compute market, and we wanted to quantify it. We analyzed the past decade of M&A across software infrastructure (defined as DevOps, dev tools, data, etc.) as compared to compute (the semiconductor value chain). Since 2015, software infra saw 8,500 M&A transactions, while compute saw 1,300. Despite having roughly 15% of the deal count, compute created $705B in value, compared to $587B in software infrastructure. It’s the power law on top of the power law – fewer opportunities but far bigger outcomes.
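For readers who want to check the math, here is a minimal Python sketch using only the figures quoted above. The variable names and the per-deal average are our own illustration, not part of the underlying dataset, and the average assumes total value can simply be divided by deal count.

```python
# Figures quoted in the paragraph above (M&A since 2015).
# Deal counts are transactions; values are in billions of US dollars.
software_infra = {"deals": 8_500, "value_usd_b": 587}
compute = {"deals": 1_300, "value_usd_b": 705}


def value_per_deal_usd_m(segment):
    """Mean M&A value per transaction, in millions of US dollars."""
    return segment["value_usd_b"] * 1_000 / segment["deals"]


# Compute deal count as a fraction of software infra deal count.
deal_share = compute["deals"] / software_infra["deals"]

compute_avg = value_per_deal_usd_m(compute)
software_avg = value_per_deal_usd_m(software_infra)

print(f"Compute deal count vs. software infra: {deal_share:.0%}")
print(f"Mean value per compute deal: ${compute_avg:,.0f}M")
print(f"Mean value per software infra deal: ${software_avg:,.0f}M")
print(f"Ratio of means: {compute_avg / software_avg:.1f}x")
```

On these numbers, the mean compute transaction comes out at roughly eight times the mean software infrastructure transaction. A mean says nothing about how that value is distributed across individual deals, so treat it as a rough sanity check rather than a finding.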

We believe our thesis will only grow in strength thanks to the token exponential. Consumer adoption is accelerating as models improve and new use cases emerge. AI is moving from occasional interaction to daily workflow. Swarms of agents are running continuously in parallel. Enterprise infrastructure is migrating from CPU-based machine learning to GPU-based LLMs. Beyond knowledge work, AI will expand into science, robotics, security, entertainment, and products we have not yet imagined.

The simplest evidence is personal. AI is not just pervasive in my life. My usage is accelerating rapidly. My wife and I just had our second baby; ChatGPT was around for the first, but now Chat is like a doctor/therapist in our pocket. At Primary, Gaby and I are in deep debate with Claude around our compute thesis. The firm is restructuring our workflows around AI tools in real time. Our token usage today is easily a hundred, if not a thousand, times greater than it was a year ago.

And underneath it all sits the same constraint: compute. So it should come as no surprise that we think Jensen is directionally correct when he suggests a $3 to $4 trillion buildout may arrive this decade alone.

The Bear Case

Of course not everyone is a Jensen bull. Michael Burry, the seer of the housing bubble, has $1.1 billion in short bets against NVIDIA and Palantir – and he's not alone. The bear case: infrastructure spend won't yield equivalent value. Anthropic and OpenAI will make over $60B in revenue, backed up by over $600B of hyperscaler capex. Google spent $24B in capex in Q3 2025, only to make $15B in their cloud business. Enterprise revenue is at risk – OpenAI, Anthropic, Google, Microsoft, and Palantir all want the entire enterprise wallet. Even early winners like ServiceNow are trading back at 2023 prices as the market sorts winners from losers.

Naysayers are pointing to signs of "bubble behavior": circular revenue deals where NVIDIA invests into OpenAI which buys NVIDIA hardware; depreciation concerns as chip cycles accelerate; unsustainable spending with OpenAI committing to nearly $1T in compute while burning $9B a year; and questionable valuations across asset classes, with sketchy pricing practices and stressed debt markets.

The behavior – irrational exuberance, circular revenue, fantastical projections – all just screams bubble. You can hear the echoes of the dot-com bubble when people speak of tokens as proof of value. Or the telecom overbuild. Or the 2008 financial crisis with the funky debt.

The linchpin of the bull case is simple: AI needs to deliver value. We believe it will, and then some.

In Defense of the Bull Case

The historical differences that defend Jensen's bull case matter:

- 2001: Overbuilt fiber sat at 5% utilization. Today, every GPU yearns to run at capacity. Even a 1% increase in utilization can be a billion-dollar revenue opportunity.

- 2008: Money flow built on unpayable consumer debt with no cash generation. Today, hyperscalers with $500B+ annual free cash flow invest in productive infrastructure. Meta, Microsoft, Google, Amazon, and SpaceX are good debtors.

OpenAI went from $2B, to $6B, to $20B in revenue in the last three years. Anthropic went from $1B to $9B in 2025 and is on track for $30B this year. This is with only 10-20% global consumer usage, no ad monetization, and barely cracking the enterprise opportunity.

Circular revenue becomes problematic when no value is created. NVIDIA invests in OpenAI and CoreWeave, then sells them GPUs with usage guarantees. But this reflects genuine scarcity and real demand – NVIDIA is capturing value at multiple points in a market that is actually expanding, rather than recycling dollars in a closed loop.

And perhaps most importantly, geopolitical competition removes the option to slow down. There's consensus we cannot cede AI leadership to China. That requires more compute.

An Unprecedented Opportunity for Value Creation

Maybe there should be a rule in investing that you're not allowed to say, “this time things are different.” But ... the all-important word in that sentence is “time.” We are undoubtedly in bubble territory. I got into tech in 2010 and, for 12 years, I heard talk of bubbles. And then the bubble burst. The value creation in that time was astronomical, and it will be de minimis compared to what is taking place now. Said another way, we believe years of demand, endless innovation, and cash-rich companies financing the build point to the fact that things are different this time, for now.

We believe the next phase of compute will require more than iteration. It will require step functions: in some cases, discontinuous jumps in architecture, efficiency, and system design that incumbents are not economically or culturally positioned to lead. In moments like this, the opportunities emerge not because incumbents are asleep, but because they are rationally bound to the status quo. In moments like this, the bottlenecks move faster than the incumbents.

The Founders Building the Future

Ultimately, this thesis is brought to life by founders ambitious enough to build where the stack is hardest. The ones we are most excited to back combine deep technical realism with a willingness to challenge what the system has accepted for decades.

A new generation is showing up. Semiconductor and computer architecture programs are surging, and young builders want to rebuild the physical foundations of the modern economy. They bring brains, audacity, and an AI-native intuition for where the world is heading.

The teams that win, though, will pair that first-principles ambition with seasoned builders who know how to ship silicon, scale systems, and execute in the real world.

Since 2023, we have taken a high-conviction approach to investing behind this view. We have backed the teams at Etched, Atero (acquired by Crusoe), Haiqu, and several more in stealth across memory, alternative computing, and other layers of the stack.

Compute is the limiting factor in a world of accelerating intelligence. The token exponential shows no signs of slowing, and the resulting pressure continues to expose structural cracks that incumbents alone cannot repair. History suggests that moments like this create rare openings for new entrants.

If you are building in compute, now is the moment. We want to meet you early, and partner with you as you shape what comes next.
