Primary Thoughts

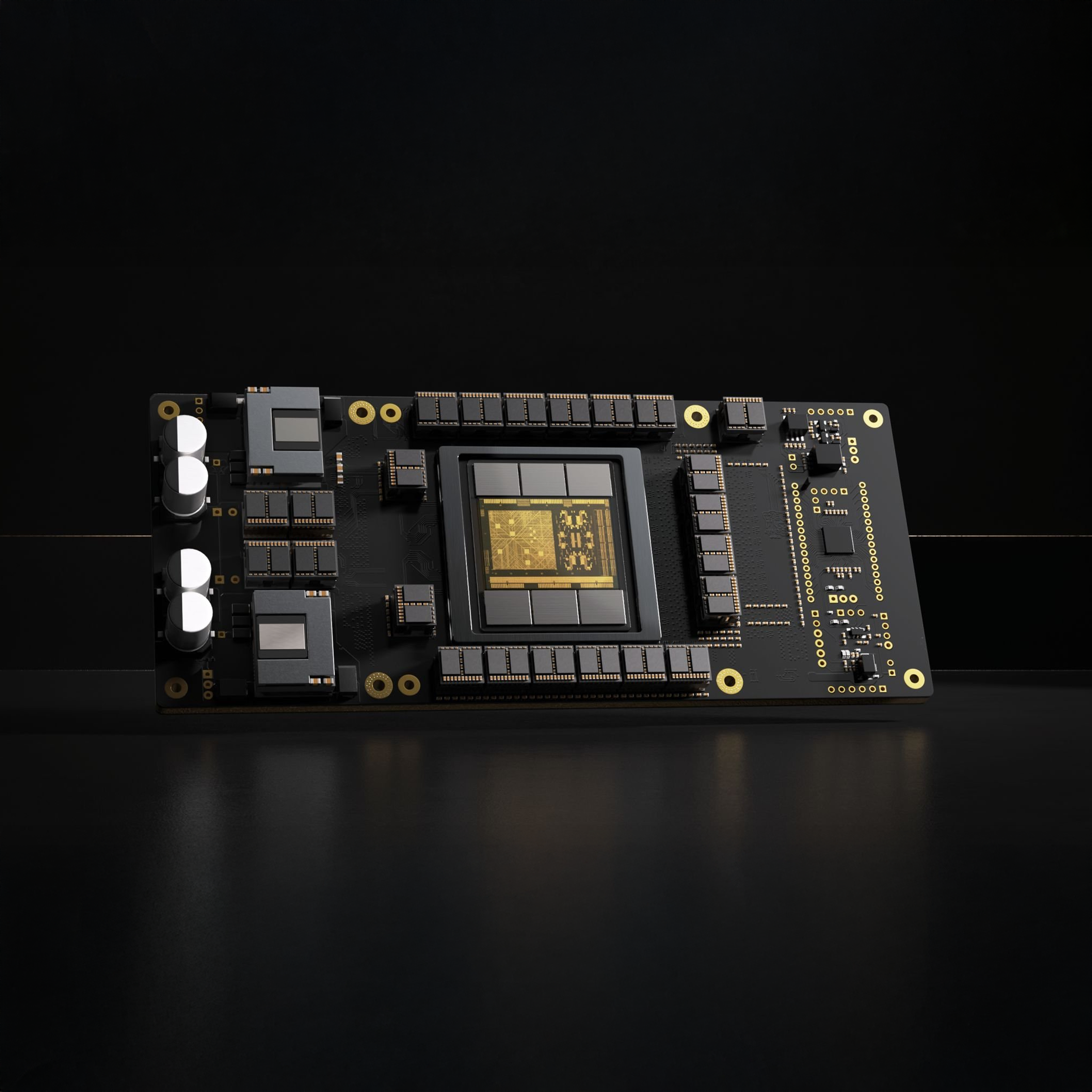

Etched’s Series A to revolutionize AI hardware with purpose-built LLM chips

"Move fast. Speed is one of your main advantages over large competitors."

This quote from Sam Altman encouraged us to venture into the daunting battlefield that is the semiconductor industry and lead Etched’s seed round about 15 months ago.

And it is speed of execution that gives us confidence in the future of superintelligence that Etched will enable. Indeed, we believe they will set a world record for fastest time to tape out for such a complex chip. This is fitting for a company building a chip that will be orders of magnitude faster than NVIDIA’s latest GPU, when running inference on transformer models.

Today, Etched is announcing a $120 million Series A to bring the same vision they pitched over a year ago to reality.

Hardware, not software, is the biggest bottleneck to truly magical AI experiences: artistic masterpieces like the next Titanic or Beethoven’s 9th produced by AI; agents that perform tasks on the web at the speed of thought, planning and booking honeymoons and preparing memos. What is impossible today will be possible tomorrow, but only with better hardware. To understand the Etched thesis, we encourage you to check out a post from the team at Etched discussing their bet.

The Etched team epitomizes the greatness of Silicon Valley, right down to the 'silicon.'

The CEO, Gavin Uberti, is brilliant, mature beyond his years, visionary, committed to the craft of being a founder, obsessed with the details, and insanely passionate. We are believers in him, and think that what Sam Altman is to software in AI, Gavin will be to hardware. You can listen to his podcast on Invest Like the Best with Patrick O’Shaughnessy, whose firm Positive Sum is co-leading this round with us, here. Gavin is joined by his cofounders, Robert Wachen and Chris Zhu, both equally remarkable in their own right. This year, they became one of the first teams to collectively receive the Thiel Fellowship.

The founders are joined by a powerhouse roster of semis professionals who are all driven to break the rules while leveraging their expertise. Mark Ross, the CTO, was previously the CTO at Cypress Semiconductor, which eventually sold in 2019 for almost $10 billion. Ajat Hukkoo, the VP of Hardware Engineering, was at Intel for nine years and Broadcom for 14 before joining Etched. Saptadeep Pal, the Chief Architect, cofounded Auradine. The bench at Etched goes deep and every single person on the team is driven by an ethos of speed.

Most silicon teams are composed of people from the same networks and companies. Etched is the opposite of that: people with different skills and perspectives who want to be part of something special and change the world. It’s a team that embodies the creativity and optimism that make startups exciting: a group of engineers coming together with intense, uncanny ambition to build something that industry insiders believe is impossible. This is the only way that radical progress ever happens. This is how we get to superintelligence.

Today, more than anything, we’re proud of the team at Etched and humbled to be a part of their journey.

We’re also excited to be collaborating with such an excellent group of investors, including not just Positive Sum, but also Hummingbird, Two Sigma, Skybox Data Centers, Fundomo, and Oceans as well as angels like Peter Thiel, Thomas Dohmke, Amjad Masad, Jason Warner, and Kyle Vogt.

Interested in joining this stellar team? Check out the more than a dozen open roles here and help build the future of superintelligence.

Why we doubled down on Inspiren

Senior living communities are at the heart of one of the most important demographic shifts of our time. An aging population, rising care costs, and persistent staffing shortages are forcing operators to do more with less—without compromising safety, dignity, or quality of life for residents. Yet most of the tools available today are point solutions: basic fall detectors, clunky emergency call buttons, or fragmented EHR systems that fail to keep pace with day-to-day realities.

Inspiren is changing that.

Their AI-powered hardware and software integrates real-time motion awareness, staff coordination, emergency response, and resident engagement into one cohesive ecosystem. From preventing falls to accelerating emergency response to improving care planning, Inspiren is redefining how senior living communities operate—while delivering measurable ROI to operators.

From Fall Prevention to Full-Stack Care Coordination

When we led Inspiren’s Seed round, the company had already built a best-in-class fall detection device. But CEO Alex Hejnosz and founder Michael Wang were thinking much bigger. They envisioned a full “intelligent ecosystem” for senior living, one that combined multiple hardware devices with a single software brain to give operators unprecedented visibility and control.

That vision is now a reality. In the past year, Inspiren has expanded from its core device to a comprehensive hardware and software product suite:

- AUGi for in-room activity sensing and fall detection

- Sense for high-risk bathroom coverage

- Staff Beacon for staff location and workflow optimization

- Help Button for residents to request help without wearing a pendant

- Inspiren HQ and Inspiren Mobile App, an AI software suite to give operators and staff real-time insights into the overall health of their communities

This unified approach does more than replace outdated systems: it collapses the need for multiple vendors into one platform, eliminating integration headaches and increasing staff efficiency. Dedicated clinicians partner closely with communities, ensuring clinical insights are communicated and applied in ways that maximize care quality, outcomes, and staff support. And, most importantly, it keeps residents safer.

Why Now

The timing for Inspiren couldn’t be better. Over 10,000 people turn 65 every day in the United States. The senior living market is fragmented, underserved, and under increasing operational pressure. Regulatory scrutiny around resident safety is increasing, while financial risk from early move-outs and under-documented care is pushing operators to modernize.

At the same time, Inspiren’s combination of AI-powered sensing and integrated software is hitting an inflection point in cost and capability:

- Affordable hardware means mass deployment is now possible.

- AI-powered embedded workflows make adoption easy and ROI immediate.

- Regulatory and operational tailwinds are pushing toward data-driven, documented care.

In a market where speed of deployment is critical, Inspiren’s ability to get devices “on walls” faster than anyone else is a decisive advantage.

Why We Backed Inspiren—Again

We’re doubling down in Inspiren’s $100M Series B led by Insight Partners because we believe they are building the category-defining operating system for senior living. Our conviction comes down to four key beliefs:

- The market is ripe for a platform that replaces fragmented point solutions with an integrated, AI-driven ecosystem.

- Inspiren has proven product-market fit with industry-leading retention, rapid ACV expansion, and clear ROI for operators.

- The economics work at scale, with a recurring software model, short payback periods, and a massive market opportunity.

- This team can execute, having consistently delivered growth and product velocity ahead of plan.

As the aging population grows and care demands intensify, senior living communities will need more than incremental tools. They’ll need a system that makes care safer, faster, and more efficient. We believe Inspiren is poised to be the market leader in this space.

We’re proud to continue our partnership with Alex, Mike, and the entire Inspiren team. The future of senior living is intelligent, connected, and compassionate, and Inspiren is leading that future.

AI-powered design review catching errors pre-construction

Bad design is one of the most persistent, overlooked, and expensive problems in construction. Change orders caused by coordination issues, missed specs, or incompatible designs aren’t just costly—they’re preventable.

In the U.S., hundreds of billions of dollars are spent on construction every year. Projects are chronically delayed and over budget, and with interest rates where they are, new development has become even more difficult in many markets. The majority of change orders could be caught in the pre-construction phase, but today’s review process is slow, expensive, and imperfect.

Large developers often outsource drawing reviews to third-party firms, paying hundreds of thousands of dollars for reports that take 6–20 weeks to deliver—and still contain errors. These delays and oversights ripple through every stakeholder, from architects to subcontractors, costing valuable time and eroding margins.

LightTable solves this. By applying AI-powered coordination and peer review, they can eliminate up to two-thirds of change orders, improving project IRRs by 3–4 points. For real estate owners, this means delivering projects faster, on budget, and with fewer costly surprises. For architects and subcontractors, it means less time revisiting old projects to fix preventable mistakes.

The Right Wedge: Peer Review

We backed LightTable in 2024 based on a simple premise: peer review today is manual, expensive, and error-prone—and AI can do it better. Starting with coordination issues across disciplines creates a powerful wedge to expand into broader design optimization and collaboration.

Three things gave us conviction:

- Acute pain: Developers feel the cost and time impact most directly.

- Adoption leverage: They can push better tooling across architects and engineers.

- Workflow ownership: Controlling the design review process opens the door to downstream automation, from value engineering to inventory-aware specs.

Since funding in November, LightTable has signed product development and design partnerships or pilots with five of the top ten developers in the U.S., including Hines, The Related Group, Greystar, Mill Creek, and Alliance. These pilots are already driving real-world feedback and product iteration, setting the stage for enterprise contracts with annual values that could reach seven figures.

Built With—and For—the Industry’s Best

LightTable’s go-to-market strategy is rooted in deep collaboration. Through these design partnerships, the team is running real projects and building in lockstep with enterprise users.

Paul Zeckser, Dan Becker, and Ben Waters didn’t just set out to build better design tools—they’re rethinking how buildings get designed from the ground up.

Paul, a product-first leader with sharp commercial instincts, spent over a decade shaping HomeAdvisor before leading product at Sealed. He’s known for turning big product visions into real market wins.

Dan, a seasoned AI and simulation engineer, founded a startup that was acquired by DataRobot and helped enterprise teams adopt AI. Frustrated by how slowly legacy players like Autodesk move, he decided to build LightTable: an AI-native company already shipping faster than most incumbents can prototype.

Ben, a trained architect who has worked at SOM and Gensler, has lived the pain of coordinating large drawing sets, only to see RFIs and change orders pile up. He was convinced AI could transform this archaic practice of human-only review and deliver massive ROI for builders.

Together, they’re the team with the perfect set of backgrounds to build for speed—and for a smarter, more intuitive future in design.

Why We’re Excited

LightTable is starting where the pain is most acute—pre-construction peer review—and building a platform that could become the coordination layer for the entire industry. The combination of early traction with top developers, a pragmatic and valuable product, and a sharp, fast-moving team makes this exactly the kind of founder-led business we want to partner with.

We’re proud to be backing Paul, Dan, Ben, and the team as they redefine how better buildings get built, long before anyone breaks ground.

The gas turbine bottleneck reshaping energy infrastructure

Electricity costs are rising fast. One midstream energy CFO in Texas told us his company’s rates have climbed from $0.35/MWh in 2021 to nearly $0.70/MWh today. “This is getting ridiculous,” he said. “We used to say the price at the pump decides elections. Going forward, it’s going to be the price at the meter.”

Similarly, an executive responsible for the data center buildout at Microsoft asks, “If every producible watt is already committed for the next five years, where can I get more watts?”

That question has become the defining obsession of this moment. AI companies, hyperscalers, and industrial manufacturers are all competing for the same scarce resource: firm, dispatchable electricity. Yet, turbines, the single most important piece of equipment required to make that power, are suddenly the most scarce.

There are effectively only three relevant manufacturers that can produce large-scale gas turbines: GE Vernova ($162B market cap), Siemens Energy ($91B), and Mitsubishi Heavy Industries ($84B). These machines sit at the center of the modern power plant. They are the hardware that converts fuel into motion and motion into electrons. Today, they are booked solid for years. GE, Siemens, and Mitsubishi are each reporting order backlogs that stretch roughly five years, with many delivery slots already sold into the next decade.

Rumors are circulating in Washington about just how acute this has become. A contact close to the Department of Energy’s new venture fund (a kind of “In-Q-Tel for energy”) told us the administration is even considering whether to pressure allies with outstanding GE turbine orders to release them back to U.S. buyers. It sounds extreme, but that’s the level of urgency building around this bottleneck.

What a Gas Turbine Actually Is

A gas turbine is, at its core, an air compressor, a combustion chamber, and a spinning shaft. Air is compressed, mixed with fuel (usually natural gas), and ignited. The expanding gases turn the turbine blades, which drive a generator to produce electricity. In a simple-cycle setup, that’s the whole process. In a combined-cycle plant, the hot exhaust is captured to make steam and power a second turbine, pushing overall efficiency higher and reducing emissions.

These are enormous machines. A single heavy-duty turbine can weigh more than 400 tons, stretch nearly 50 feet long, and generate anywhere from 100 to 400 megawatts, enough to power a mid-sized city. Smaller aeroderivative models, adapted from jet engines, are used for distributed or peaking power. Each large turbine costs roughly $50-100M depending on its class and configuration. It is elegant, brutally engineered machinery, and the workhorse of global baseload power.

How We Got Here

The roots of the shortage go back two decades. In the early 2000s, gas plants were being built everywhere. Then the 2008 crash hit, followed by another glut in the mid-2010s. Orders evaporated. OEMs shut factories, laid off skilled labor, and merged their supplier bases. The “painful overbuild” became a corporate cautionary tale as the turbine market crashed in 2018.

By the late 2010s, renewables dominated investor attention. Gas turbine divisions were treated as cash cows, not growth engines. Capacity stayed flat even as demand for electricity quietly began to climb again. Then came AI.

Starting in 2023, data-center power requirements exploded. Hyperscalers that once drew tens of megawatts suddenly needed hundreds. Natural gas looked like the fastest, most practical bridge to new capacity, but the world only had three major manufacturers left, and none had invested to scale. With the fresh memory of 2018 and some uncertainty about how much energy the AI buildout would actually require, they weren’t rushing to ramp capacity again.

Siemens Energy now reports a €136 billion backlog, the largest in its history. GE Vernova has roughly 55 gigawatts of gas orders in its queue and plans to expand from about fifty to eighty heavy-duty units a year by 2026. Mitsubishi Power says it will double production, but is already sold out into 2028. Even if all three deliver on their expansion plans, total output might rise only twenty to twenty-five percent—nowhere near enough to meet demand.

Anatomy of a Shortage

Every layer of the supply chain is fragile. The high-temperature blades and vanes inside the turbine are cast from exotic nickel alloys by a few companies such as Howmet Aerospace ($76B market cap) and Precision Castparts (acquired by Berkshire Hathaway for $37B). The massive forged rotors that hold everything together are produced by a handful of plants worldwide, including Japan Steel Works. The heat-recovery steam generators that make combined-cycle plants efficient are backlogged. Transformers, switchgear, and control systems are just as scarce.

The result is a perfect storm of concentration and pricing power. One energy CFO told us that total project capex costs have doubled in just fifteen months, from roughly $1,000/kW to more than $2,000/kW. “It’s cartel pricing,” he said. “There’s nowhere else to go.” Reuters now cites installed combined-cycle gas turbine costs moving from ~$1,000/kW to $2,000–$2,500/kW on recent projects, driven by turbine scarcity, HRSG/transformer backlogs, and EPC/labor constraints. Some developers are scouring Alberta and the Gulf Coast for refurbished turbines from the 1990s because new ones are unavailable. Others, including major industrial conglomerates, are buying units on spec simply to guarantee they have equipment for future plants. Koch Industries is rumored to be doing the same.
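To make the per-kilowatt figures concrete, here is a rough back-of-the-envelope sketch in Python. The 500 MW plant size is our own hypothetical assumption, chosen only for illustration; the $/kW values are the installed-cost figures cited above. It is a simple multiplication, not a project finance model.

```python
# Illustrative arithmetic only. The plant size is a hypothetical assumption,
# not a figure from any specific project.
PLANT_CAPACITY_MW = 500

# Installed combined-cycle cost figures cited above, in dollars per kW.
COST_PER_KW = {
    "earlier": 1_000,
    "recent_low": 2_000,
    "recent_high": 2_500,
}


def installed_cost_usd(capacity_mw: float, cost_per_kw: float) -> float:
    """Total installed cost: capacity converted from MW to kW, times $/kW."""
    return capacity_mw * 1_000 * cost_per_kw


costs = {
    label: installed_cost_usd(PLANT_CAPACITY_MW, per_kw)
    for label, per_kw in COST_PER_KW.items()
}

for label, usd in costs.items():
    print(f"{label:>12}: ${usd / 1e9:.2f}B")
```

Under these assumptions, the same hypothetical plant goes from roughly $0.5 billion to between $1.0 and $1.25 billion in installed cost, before any financing or delay costs are considered.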

Implications for the AI Buildout

Natural gas was supposed to be the fast path. Today, its build timelines look a lot like those of emerging nuclear small-modular-reactor projects. It’s no surprise that Microsoft, Google, and others are signing enormous power-purchase agreements with nuclear and geothermal developers such as Oklo, Kairos, TerraPower, and Fervo.

A chief strategy officer at one hyperscaler told us he’s signing these LOIs freely, while assuming most will never reach operation. Even among those that do, he expects only a fraction of the current terms to survive to commissioning. But that hardly matters. For hyperscalers, the act of signing unlocks investment flows, de-risks sites, and signals seriousness to regulators. It’s a rational strategy in an irrationally tight market: spray capital at every potential source of electrons, then back the few that deliver.

Our Perspective

The turbine shortage is not just an isolated equipment problem. It exposes the fragility of the entire industrial stack: the transformers, EPC labor, HRSGs, and switchgear that all depend on long, brittle supply chains. Turbines just happen to be where the pressure shows first.

For startups, there are enormous opportunities across this landscape. Predictive-maintenance networks for turbine fleets. Software that helps EPCs and utilities coordinate procurement. Financing models that let developers pre-buy critical equipment. Materials or manufacturing breakthroughs that reduce lead times. Even the idea of a fuel-agnostic turbine that runs on gas today and integrates with a nuclear reactor tomorrow feels less like science fiction than a commercial inevitability.

Still, solving this likely won’t be as simple as building another “Anduril for turbines” (though we’d entertain it). Heavy industrial capacity takes years, patient capital, and government partnership to stand up. The payoff is profound. Whoever helps unjam these bottlenecks will sit at the center of the next trillion-dollar buildout and power the world’s compute.

We’ll continue exploring these supply-chain chokepoints in future issues, from turbines to transformers to the EPC networks that knit them together. As always, we’re early in our journey into energy and learning in public. If you’re building, financing, or operating anywhere in this bottleneck, whether you make turbines, HRSGs, or just want to power the next wave of AI, we’d love to hear from you.

Why We Invested in Lyric—Again

Supply chains weren’t built for the world we live in now.

Pandemics, geopolitical risk, climate shocks, volatile demand, and an AI-fueled productivity race have put operators under more pressure than ever. But while everything around them is changing, the software they rely on hasn’t kept up.

Legacy supply chain tools like SAP, Blue Yonder, and Coupa were built for static environments. They offer rigid templates, brittle implementations, and expensive services arms that struggle to adapt to real-world variability. Even Gen 2 “smart” platforms like Palantir lean heavily on forward-deployed engineers to tailor models to each customer, resulting in long timelines, high cost of ownership, and limited reach.

In a world where operational agility is the difference between growth and chaos, the software that powers our supply chains can’t be static. It has to be composable, context-aware, user-extendable and fast.

In 2023, we led the seed round in Lyric because we believed the services-heavy playbook for enterprise software was breaking down, especially in supply chain. We saw a world where bespoke AI tooling was finally colliding with business reality: long-tail complexity, fragmented data, and a crippling reliance on forward-deployed engineers. Lyric offered a new path: a composable platform that could absorb complexity without defaulting to custom work. We were honored that a sophisticated repeat founder like Ganesh Ramakrishna opted to partner with us on what we do best: seed-specific operational partnership.

Today, we’re proud to double down in Lyric’s $44 million Series B alongside Insight Partners, VMG Catalyst, Permanent Capital, and other insiders.

Why? Because Lyric isn’t just delivering on the original thesis—it’s blowing past it.

The Product Problem Nobody Solved

Ganesh Ramakrishna knows this world inside and out.

Before Lyric, he built Opex Analytics, a services business that delivered custom data applications to Fortune 500 supply chains. But every win came at a cost: each solution was a one-off. The team shipped bespoke code for every engagement.

That’s the problem with most enterprise AI today. It generates project-level IP useful for one customer, once. Not product-level IP that scales across use cases and companies.

Ganesh started Lyric to change that.

Lyric is a composable, AI-powered platform that turns operational complexity into decision intelligence. The platform layers domain-aware data infrastructure, a rich library of optimization and ML models, a no-code sequence builder, and intuitive UIs, all wrapped in agentic AI tools that accelerate development and deployment.

It doesn’t just solve problems. It productizes the solutions.

From Six Months to Six Hours

What does this mean in practice?

At a major beverage company, Lyric started with just two use cases: out-of-stock forensics and production planning. These weren’t problems the customer’s existing stack (Palantir, Blue Yonder, Oracle) could solve. But Lyric could. The first few apps delivered $8 million in value. Soon the customer had built 20+ apps on their own with no consultants involved. Today, Lyric powers more than $40 million in ongoing ROI inside that enterprise.

At another global CPG, Lyric replaced a $2 million, six-month failed implementation with a working solution in four weeks. That app saved $1 million per quarter and led to a wave of expansion across planning, logistics, and risk analytics.

The POC Factory deserves special mention. Lyric can go from raw data to a full-featured production-grade application in as little as six hours. Customers aren’t pitched with decks or mockups. Instead, they’re handed a live app tailored to their business. That experience doesn’t just accelerate sales. It sets a new bar for customer expectation.

It’s no surprise that Lyric’s go-to-market motion, which until recently was entirely founder-led, has scaled with remarkable efficiency and unprecedented ACV expansion.

The Platform Is the Product

Lyric’s true innovation is about speed and scale. Most enterprise AI platforms still operate like bespoke consultancies. They promise flexibility, but that flexibility is serviced, not shipped. Lyric’s composable architecture flips that model. It allows customers to build once and scale infinitely. Applications are modular, models are extensible, and integrations are already in place across the dominant enterprise data and planning systems.

What we’re seeing now is the platform unfolding across use cases far beyond what it was originally built for. Customers who began with core supply chain planning and network design are now solving for fleet and workforce optimization, predictive maintenance, sustainability forecasting, warehouse layout, and more. These weren’t on the roadmap a year ago, but because Lyric’s platform is composable by design, these apps aren’t exceptions. They’re natural extensions.

This is how platforms grow. Not by narrowly optimizing a single workflow, but by adapting to the evolving needs of the enterprise. Lyric isn’t just flexible software. It’s infrastructure for operational intelligence.

What’s Next

Lyric now has the team, product, and momentum to scale into the broader vision it was always meant to fulfill. With seasoned GTM leadership in seat, the company is ready to go from founder-led selling to full GTM scale. The POC Factory remains a superpower, dramatically shortening the distance between interest and impact, and will continue to be a cornerstone of the buyer experience.

Under the hood, Lyric is pushing aggressively into agentic AI. New copilots are being trained to help customers test scenarios, build workflows, and deploy models even faster. As the product expands, so does the community: more customers are building their own apps, repurposing existing ones, and contributing to a growing library of reusable solutions.

Most importantly, Lyric’s market is expanding. What began as a bet on supply chain planning is now becoming a horizontal platform for any operationally complex, data-rich decision in the enterprise. In a world where cost structures are shifting, AI tooling is democratizing, and operational resilience is a board-level priority, we believe Lyric has the chance to define a new category.

Lyric isn’t just rewriting how enterprise software is built. It’s redefining how real-world decisions get made. We’re proud to be along for the ride—again.

Maybern replaces spreadsheets running billion-dollar portfolios

More and more money continues to flow into the private markets. Today, private funds manage over $13T and continue to grow rapidly, averaging 20% annual growth since 2018. This explosive growth reflects a fundamental shift in how institutional and private capital is being deployed, with private market capital becoming an increasingly critical pillar of our global financial systems.

As funds grow, so does complexity. The bespoke nature of how deals are structured, both for individual investments and for agreements with limited partners, means it has historically taken an army of accountants and Excel spreadsheets to manage them. Fund CFOs are faced with two not-so-great options:

- Try to pay top dollar to grow a large team in-house in the midst of a massive accounting talent shortage and bear all of the associated costs.

- Pay a third-party fund administrator like SS&C ($18 billion market cap) or SEI ($9 billion market cap) six or seven figures annually (and then run a shadow system to double-check everything in-house).

The challenges facing fund CFOs today are more acute than ever before. When we spoke to 20+ fund CFOs ranging from $1B first-time fund managers to the most sophisticated finance teams with hundreds of billions under management, we heard the same pain points over and over again. Dissatisfaction with fund admins. Poor data quality and access. Lack of visibility into how things are calculated. Multi-day turnaround times on simple questions. Frequent mistakes. High staff turnover.

All this against a backdrop where pressure is mounting on both sides. On one side, Managing Partners are pushing fund CFOs to be more strategic: to evolve beyond running the back office and deliver better insights, more quickly, on portfolio construction and management, cost optimization, and fundraising strategy. On the other side, LPs and internal IR teams are asking for faster, more in-depth, and more frequent reporting, leading to a constant time-suck of information retrieval and bespoke analyses.

This shift from operational CFO to strategic CFO is one that we’ve seen play out in corporate CFO tooling, where software has emerged to streamline and automate the day-to-day operational work of finance teams, freeing up time for strategic decision-making. Many large winners have emerged from this trend, including Anaplan, Blackline, Workiva, and OneStream, just to name a few. Private fund accounting, however, isn’t governed by standardized GAAP principles. Instead, funds are governed by their limited partnership agreements (LPAs), which not only can take many shapes and forms but are also often accompanied by negotiated side letters with preferential terms for certain investors. This complexity meant that previous attempts to build software for this problem were either limited to much simpler use cases (e.g., small venture funds) or required extensive customization with multi-year implementation timelines.

Enter Maybern

Maybern is revolutionizing private funds with the first true operating system for fund finance. Purpose-built in partnership with the most complex funds managing billions in AUM, Maybern’s core product centralizes and automates essential operations, saving finance teams hours of headache. More importantly, however, the software becomes the single source of truth for all fund flows regardless of the complexity of the underlying legal structure. The core engine, designed for maximum flexibility, adapts to each fund’s unique structures and workflows, handling even the most complex edge cases.

What makes Maybern particularly special is the decision to start with real estate private equity, arguably the most complex segment of the market. By solving for the most challenging use cases first, they’ve built an incredibly robust and flexible platform that can handle virtually any fund structure or requirement without writing a single line of custom code. They’ve quickly leveraged this advantage to win the hearts and minds of CFOs across traditional private equity, growth equity, and private credit.

There is no team better suited to tackle this problem. The Maybern cofounders, Ross Mechanic and Ashwin Raghu, initially met at Cadre, a tech-enabled private equity platform for individual investors. There, they built the internal system that allowed Cadre to multiply AUM without needing to scale headcount. Upon our first meeting with Ross, it was clear that he was deeply obsessed with this problem and determined to change this market. Although an engineer by training, he had personally read hundreds of LPAs and set out to learn everything there was to know about fund finance. He came to realize that it was possible to build software that could address all the edge cases and complexities; it just wouldn’t be easy. Since then, Ross and Ashwin have assembled a team of world-class engineers and fund finance specialists to tackle a problem many thought was unsolvable.

When we spoke to early customers, it became clear that Maybern would fundamentally transform how funds are run. Fund CFOs are very rarely effusive, so hearing them praise the solution’s immediate transformation of internal operations and the deep expertise and credibility of the team stood out. With an internal buyer in house, it only made sense for us to put Maybern in front of our CFO, Mike Witowski, who had previously spent time at one of the largest fund admin providers. In the span of one meeting, Ross and Ashwin managed to transform his wary skepticism into astonished enthusiasm. It was at that moment that we knew this was something special.

As the private markets grow bigger and more complex, Maybern is at the center, building a true enterprise-grade central nervous system for fund operations. Soon, the most sophisticated private funds will run on Maybern. We’re thrilled to be leading the Series A and to be partnering with the team on this journey.

The rev ops platform built for modern finance

Revenue collection is the biggest opportunity in the CFO stack, and Tabs is poised to own it. CEO and Co-Founder Ali Hussain is fierce in all the right ways: urgent and thoughtful, yet a true servant leader. Alongside co-founders Deepak Bapat and Rebecca Schwartz, and an extraordinary team of passionate builders, Tabs is creating one of the most promising vertical-AI companies in New York City.

Today, we’re excited to announce our continued support of Tabs, the AI-native revenue platform for modern finance teams, as a part of its $55 million Series B led by Lightspeed Venture Partners. Tabs is automating the revenue engine for the AI-native CFO, powering fast-growing companies like Cursor, Statsig, and Cortex.

Accounts receivable, the all-important task of collecting revenue, is breaking free from the ERP. There are incredible decacorns associated with every layer of the CFO stack—Ramp for spend, Rippling for payroll, Bill.com for AP—but no company owns AR. The ERP is unbundling as enterprises are modernizing, with 50% of CIOs expecting to upgrade their ERP in the next two years. The ERP historically dominated the CFO stack, but lengthy implementations, expensive pricing, brittle architecture, and antiquated product experiences created an opening to build best-in-breed solutions for each category.

We believe revenue is the biggest prize in this unbundling. Revenue collection sees the biggest flow of money. It commands the most time from experienced, senior members of your team. Given the complexity and criticality of revenue, the market opportunity for Tabs swells to over $1 trillion in senior labor spend required to manage revenue and billing operations at companies of all sizes.

Tabs transforms AR from a manual, ops-heavy burden into an automated revenue engine. By making contracts—not CRMs or spreadsheets—the system of record, Tabs enables CFOs to say “yes” to growth, experimentation, and new monetization models without getting trapped in their legacy ERP.

The Finance Stack Wasn’t Built for the AI Era

Accounts receivable has historically been a burden finance teams haven’t been able to engineer themselves away from. Legacy approaches to AR force companies to map CRM and billing data through tools like NetSuite or Stripe, creating endless edge cases. Handling contractual deviations (discounts, custom usage, multi-year contracts) becomes a painful manual exercise, ballooning the size of finance ops teams. It could take three days to reconcile contracts in an Excel doc with what’s in your CRM, manually cross-reference materials, feed that data back into your ERP, then automate a billing flow. The painful result is delayed collection of revenue.

AI has created an incredible opportunity to transform AR, improving accuracy on contract parsing, reducing time spent on reconciliation, and making the platform flexible to new pricing models. AI is the unlock that enables Tabs to convert a contract into cash. By treating the contract (the same artifact auditors already rely on) as the source of truth, Tabs eliminates manual reconciliation, using AI to collect unstructured contracts and automatically turn them into revenue.

Pricing innovation makes this shift even more urgent. AI-native companies are experimenting with consumption billing, usage-based pricing, and hybrid models at a pace legacy systems can’t support. Even if you solved the data mapping problem, introducing a new pricing model renders that mapping useless in moments. Traditionally, this made the CFO the brake on sales creativity, prioritizing revenue clarity over pricing innovation. Tabs changes that: finance becomes a team of “yes,” with revenue systems that adapt as quickly as sales. For CFOs navigating the AI era, this flexibility is crucial to keeping up, as every company knows its pricing will evolve, and AR must keep pace.

The result with Tabs is faster closes, leaner teams, and higher confidence for both finance and auditors.

Contract-to-cash and beyond

Tabs is built for this moment. Its wedge is contract-to-cash automation: ingesting messy contracts, extracting commercial terms, generating invoices, chasing payments, and recognizing revenue with minimal human involvement. Tabs’ ingestion engine transforms static PDFs into bill-ready logic, while Slack-native workflows and API-first integrations embed directly into how finance teams already operate. Tabs isn’t just digitizing invoicing—it’s reimagining the entire revenue cycle.

Billing and revenue often require senior team members tackling mundane tasks. Their work also spans many solutions, referencing the contracts, CRM, ERP, payments processor, and other systems to recognize revenue. This cross-functional workflow that requires domain expertise makes it perfect for agentic automation, freeing up teams to do higher-value work. Tabs makes it possible to automate everything from edge cases to revenue rules that used to require armies of finance professionals.

CEO Ali and the Tabs team believe the future of finance looks like this: lean senior teams powered by swarms of AI agents managing revenue at scale. From your first dollar to your billionth, the platform automates billing, collections, revenue recognition, audit, and reporting – with an auditable trail across the CFO stack.

That vision unlocks more than AR. With the launch of Tabs Agent, its Slack-native AR agent, Tabs is taking the first step toward building a true agentic revenue platform.

Why we invested

Since incubating Tabs, Ali and his team have blown past every target, quarter after quarter, while shipping products at remarkable speed. With $55 million in fresh funding led by Lightspeed, Tabs is positioned to lead the shift away from ERP-bound finance and toward an AI-native, best-in-breed revenue platform.

At Primary, we believe Tabs will be one of the defining companies of the AI era—a generational compounder that fundamentally reshapes the economics of finance. That’s why we’re proud to deepen our partnership in this $55 million Series B. Tabs isn’t simply automating AR—it’s creating the revenue operating system for the AI era, unlocking a trillion-dollar labor market and turning CFOs from bottlenecks into accelerators. We’re proud to back Ali, Deepak, Rebecca, and the entire Tabs team again for this next phase of their product.

Tabs was incubated at Primary Labs. If you’re interested in learning more about incubations, becoming an Operator-in-Residence, and building with us, please reach out to labs@primary.vc.

The $30M bet on AI-powered go-to-market execution

Today’s “modern” go-to-market motion often feels anything but modern because it is fundamentally broken for both sellers and buyers. Commercial teams struggle to navigate growth and efficiency trade-offs, and buyers invariably feel those trade-off tensions in underwhelming customer experiences. While AI promises transformation, to date it has largely delivered only incrementality. Buyers and sellers are drowning in point solution experiments that don’t materially move the needle. Nobody wins.

Growth commands a premium over profitability even in the tightest market conditions, so SaaS companies are always charging hard at top-line ARR growth, even if that means sacrificing quality and efficiency along the way. Everyone loves to talk about the eye-popping growth of the AI darlings, but we live in a tale of two cities: in the non-AI-native world, where top-line ARR growth has been compressed, companies have been forced to pay more attention to their bottom lines, meaning all eyes are on efficiency. Metrics such as “APE” (ARR/FTE) are the talk of the town.

More often than not, getting more efficient means cutting costs. But the reality for sales-led businesses is that 50-80% of their sales and marketing burn is headcount, so when they push to make teams more efficient, it’s usually the buyer who suffers: slower response times, reduced access to answers until they can prove they are fully “qualified,” and so forth. Buyers are consistently underwhelmed and frustrated when evaluating new solutions because the process is so disjointed for them: schedule a meeting with a BDR only to get minimal information while they evaluate you, wait an entire day for an account executive to sync with a solutions consultant on technical questions before circling back, etc. As the old sales adage goes, “time kills all deals,” and many deals are lost before a qualified lead is ever even logged in the system purely on account of the bumpy buyer experience.

Even high-growth or mature sales teams with plenty of resources haven’t delivered much better for their buyers. The larger commercial teams become, the wider the distribution in team performance and quality, thus weakening the efficiency of the overall funnel and rate-limiting growth (while still disappointing the end customer).

The product-led growth (“PLG”) movement proved that it is possible to operate in a leaner capacity while still delighting customers, but “product-led” was the operative part of that equation. We believe that AI-led growth will democratize this potential to every shape, flavor and size of company in a way that benefits buyers and sellers alike. This is why we’re excited to introduce 1mind and announce their $40M in total funding following their recent $30M Series A led by Battery Ventures. We were privileged to lead 1mind’s $10M seed round in the spring of 2024, and are excited to double down on our support as 1mind enters their next chapter of growth.

Today, go-to-market (“GTM”) executives are inundated with pitches for AI point solutions and co-pilots. Siloed tools lack the efficacy of a horizontal solution (e.g., a sales-facing AI would be much more helpful to a prospect if it were also trained on customer support questions, internal knowledge bases, etc.) and broader, more horizontal LLMs don’t offer the level of accuracy CROs require. And at the end of the day, humans are inherently rate-limited in how fast they can read, digest and act on information provided by co-pilots. 1mind deploys human-like AI to GTM teams to augment and ultimately replace full-time employees. As Jacco van der Kooij wrote in a recent Winning by Design research paper, “the best use of AI is not to improve people's performance through co-pilots but to create disproportionate improvement by using AI to optimize the process entirely and replace the seller.” GTM teams are hungry for a consolidated approach purpose-built for them.

We aren’t talking about agents or AI wrappers. 1mind’s superhumans have faces, voices, personalities, knowledge, motivations and a GTM brain. They have their own AI-optimized virtual desktops that allow them to do anything a human can do on their computer, including joining impromptu video calls to give presentations or demos. 1mind promises the power of a salesforce that never sleeps, learns at lightning speed, and evolves continuously.

Amanda Kahlow, 1mind’s founder and CEO, was previously founder and CEO of 6sense, the pioneer of B2B account-based marketing and intent data. After taking some time off following that incredible journey, Amanda sprang back into action when she identified another category-creating opportunity to transform how B2B companies grow, through what she has coined AI-led growth, or AiLG. As Amanda regularly says, 6sense was to find buyers and 1mind is to close them. Sachin Bhat, Amanda’s right hand and 1mind’s CTO, is an experienced enterprise technologist and former founder who most recently spent 5 years scaling complex global engineering and data teams at Rippling.

We believe that Amanda’s reputation and reach make her uniquely suited to usher GTM teams into the next (AI-powered) era of growth and that the formidable commercial-technical duo of her and Sachin will build the definitive GTM platform and accelerate AI adoption across all flavors of commercial and customer teams.

Indeed, sales is just the beachhead: 1mind’s vision is to be all-knowing for an organization, with targeted use cases that run the gamut from top of funnel qualification all the way through to ongoing technical support. 1mind will become the brain, face, and voice of commercial and customer organizations. And as 1mind executes its horizontal, end-to-end play, we believe they will be well-positioned to box out other categories of software, including training and enablement software, internal knowledge management tools, demo automation software and more.

No one tells a company’s story better than its customers, and 1mind’s reviews are off the charts. To date, the team has successfully partnered with incredible brands such as HubSpot, Nutanix, Owner, Seismic, New Relic, and Boston Dynamics. Thirty percent of customers expand to new use cases within 90 days of going live, and several of 1mind’s customers have joined the cap table.

AI-led growth is the future of go-to-market. There will always be things humans will do better than AI, but there are many things AI will consistently do better than humans, and we’re excited to live in a world where that is celebrated and encouraged. As Amanda says, “if you are worried about your AI hallucinating, have you asked yourself whether your sellers hallucinate?”

It’s exhilarating to imagine SaaS companies no longer needing to think about account prioritization or ticket deflection because AI does the heavy lifting for them, never mind the resulting implications for buyer satisfaction. If you’re a revenue leader committed to true transformation in your go-to-market organization, we encourage you to engage with 1mind’s superhuman (Mindy) today.

Scaling for the AI era: Fund V

Primary has, from our beginnings, never looked like a traditional VC firm. My co-founder Ben and I always liked it that way, and as we’ve scaled, we’ve leaned ever-harder into those differences. Today, I’m thrilled to share that we have taken the next step in scaling our firm and in breaking the norms of our industry as we announce our fifth set of funds. At $625M, this represents the largest standalone seed firm in the market and a ringing endorsement by our LPs of our ambition to build the biggest, most impactful, and best performing seed firm on the planet.

Today, Primary leads pre-seed and seed rounds across tech sectors, backing exceptional founders from San Francisco to Tel Aviv. We practice the craft of seed investing at institutional scale. On our way to 80 full-time employees, we are led by a team of eight exceptional, sector-focused investing partners, each running their own strategies and partnering with the very best founders in their sectors: Financial Services, Healthcare, Vertical AI, Infrastructure/Compute, Cybersecurity, Consumer, GTM Tech, and the Industrial Evolution. Our partners bring deep insights, extensive networks, and curious, prepared minds to their craft.

Super-charging our investing work is an Impact team, led by a C-suite of seasoned operators, that delivers operational support unequaled at any stage of venture. And tightly aligned with both teams is PrimaryLabs, our incubation engine, which in recent years has launched many of the most exciting companies in our portfolio. In aggregate, Primary is a team with more scale, more muscle, and more ability to drive genuine results in support of founders than any in the market.

At 10x the size of our first fund, we are beyond what Ben and I imagined when we began working on Primary. We were never remotely interested in building a typical venture capital firm. We joined forces because we wanted to build something that changed the way the industry operated, putting the founder at the center. Our most important firm value has always been “Wear the Founder’s Shoes.” Guided by that, we listened to founders and invested in the capabilities they most valued across talent acquisition, revenue generation, and capital raising.

As our early investments began to bear fruit – 9 of the 25 investments in our first fund reached unicorn status and another two achieved cash exits north of $500M – our conviction grew. The unique way we made operating resources available to founders was making a difference. With conviction came ambition to push further, for we knew that our model would get even better with scale. As we’ve scaled, we’ve resisted the temptation that unfortunately defines our industry: using new management fee resources not to execute better for founders, but to further line the pockets of the very investors whose incentives now drive them away from seed and towards larger/later deals. Instead, we’ve grown our team and capabilities in line with the growth of our funds.

This year our team will make hundreds of hires, drive tens of millions of dollars of revenue pipeline, and support dozens of new financings for our companies. As we all race to find and earn the right to work with the founders who are driving the AI revolution, Primary is showing up with more capability and more expertise than ever. And at a time when many firms are struggling to raise capital, our existing LPs have doubled down on our strategy and several amazing, mission-driven institutions have joined us as LPs for the first time.

We are eleven years into an amazing journey at Primary. Every year we relearn the original lesson of venture: success is all about the founders with whom you partner. Our journey has been defined by a remarkable collection of founders and the successes they’ve delivered for our LPs. Our mission has been to support them with everything we’ve got. We feel truly blessed that the unconventional strategic bets we made early on have resonated with founders and paid off in earning us their partnership.

We sit today with a unique market position in the midst of what is by a vast margin the most exciting and important moment in the history of information technology. And we’re not stopping building. We never will. We’re rapidly reinventing everything about how we operate for an agentic future, building new products, new technologies, and new capabilities internally, all in service of continuing to offer something even better in support of world-class founders (more coming here soon).

We are eternally grateful to the LPs who have entrusted us with their capital and given us the right to continue to add our own dent to the universe in service of founders. Fund V is bigger than ever, and we fully expect it to be better, as well. We can’t wait to meet the founders defining a new generation of innovation and magic in our world.


Our compute thesis: Etched and beyond

In January 2023, semiconductors were not the rage. NVIDIA was worth $360B. Memory was a sleepy commodity. Hyperscaler capex totaled $130B+, relatively flat from the year prior. Approximately 100k H100-equivalent compute systems were deployed globally, with few having ever heard the term “H100” before.

We were post the ChatGPT moment, but the gears of the semiconductor supercycle were only beginning to churn.

Today, NVIDIA is worth over $4.5T. Multi-trillion-parameter models demand staggering memory capabilities, driving sky-high DRAM prices and scarce supply. Hyperscaler capex in 2025 surpassed $400B and is expected to grow to $600B in 2026. As of Q3 2025, more than 3 million H100-equivalent systems are training models and running inference. Executives now brag about their secured GPU shipments as they compete in an economy increasingly organized around AI.

Back to early 2023.

Our belief was that this was about to change. AI demand was set to explode, and the world did not yet have sufficient compute to meet the need. Scaling laws suggested that no amount of computation would ever truly be enough to deliver the magical experiences AI could unlock. A demand tsunami was coming.

We intuited that a window of opportunity was opening for entrants to capitalize on the moment. In February, I shared this view with the Wall Street Journal: “There’s new openings for attack and opportunity for those players because the types of chips that are going to most efficiently run these algorithms are different from a lot of what’s already out there.”

That same day, I met Gavin Uberti, the CEO and cofounder of Etched.

Gavin had a pitch-perfect understanding of what was about to happen: transformer dominance would expand; the GPU bottleneck would intensify; inference would become the all-important battleground; and the power of purpose-built hardware would become clear.

We led the Seed round in Etched in March 2023 and have since worked with Gavin and the team as they build what they like to call the hardware for superintelligence. This experience, combined with studying the broader compute supercycle, helped crystallize our conviction.

Our Compute Thesis

Our compute thesis is simple. Alpha exists at seed for compute investing. Every major technology shift requires a rebuild of its underlying infrastructure. As compute drives intelligence, demand for computation is growing exponentially, forcing a massive capex buildout across silicon, power, networking, and cooling. Fundamental bottlenecks are emerging. Incumbents are being pushed beyond incremental improvement. New opportunities are opening for founders taking first principles approaches to rearchitect how computation is produced and scaled. Yet the majority of seed investors shy away from compute deals.

We had noticed that fewer VCs spend time in the compute market, and we wanted to quantify it. We analyzed the past decade of M&A across software infrastructure (defined as Dev Ops, Dev Tools, Data, etc.) as compared to compute (the semiconductor value chain). Since 2015, software infra saw 8,500 M&A transactions, while compute saw 1,300. Despite having roughly 15% of the deal count, compute produced $705B in value creation, compared to $587B in software infrastructure. It’s the power law on top of the power law: fewer opportunities but far bigger outcomes.
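For readers who want to check the arithmetic, here is a minimal sketch in Python using only the four figures quoted above. The "average value per deal" it computes is our own illustrative simplification, not a metric from the underlying analysis, and a simple average hides how skewed these outcomes are. The variable and function names are hypothetical.

```python
# Figures quoted in the paragraph above (M&A since 2015).
# Values are in billions of US dollars.
software_infra = {"deals": 8_500, "value_usd_bn": 587}
compute = {"deals": 1_300, "value_usd_bn": 705}


def value_per_deal_mn(segment):
    """Average value per transaction, in millions of dollars.

    Assumption: a plain mean across all deals, used only for illustration.
    """
    return segment["value_usd_bn"] * 1_000 / segment["deals"]


deal_count_ratio = compute["deals"] / software_infra["deals"]
compute_avg = value_per_deal_mn(compute)
software_avg = value_per_deal_mn(software_infra)
per_deal_multiple = compute_avg / software_avg

print(f"Compute deal count vs. software infra: {deal_count_ratio:.0%}")
print(f"Average value per compute deal: ${compute_avg:,.0f}M")
print(f"Average value per software infra deal: ${software_avg:,.0f}M")
print(f"Per-deal value, compute vs. software infra: {per_deal_multiple:.1f}x")
```

On these figures, compute has about 15% of the deal count but close to eight times the average value per deal, which is the "power law on top of the power law" in numeric form.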

We believe our thesis will only grow in strength thanks to the token exponential. Consumer adoption is accelerating as models improve and new use cases emerge. AI is moving from occasional interaction to daily workflow. Swarms of agents are running continuously in parallel. Enterprise infrastructure is migrating from CPU-based machine learning to GPU-based LLMs. Beyond knowledge work, AI will expand into science, robotics, security, entertainment, and products we have not yet imagined.

The simplest evidence is personal. AI is not just pervasive in my life. My usage is accelerating rapidly. My wife and I just had our second baby; ChatGPT was around for the first but now Chat is like a doctor/therapist in our pocket. At Primary, Gaby and I are in deep debate with Claude around our compute thesis. The firm is restructuring our workflows around AI tools in real time. Our token usage today is easily a hundred, if not a thousand times, greater than a year ago at this time.

And underneath it all sits the same constraint: compute. So it should come as no surprise that we think Jensen is directionally correct when he suggests a $3 to $4 trillion buildout may arrive this decade alone.

The Bear Case

Of course not everyone is a Jensen bull. Michael Burry, the seer of the housing bubble, has $1.1 billion in short bets against NVIDIA and Palantir – and he's not alone. The bear case: infrastructure spend won't yield equivalent value. Anthropic and OpenAI will make over $60B in revenue, backed up by over $600B of hyperscaler capex. Google spent $24B in capex in Q3 2025, only to make $15B in their cloud business. Enterprise revenue is at risk – OpenAI, Anthropic, Google, Microsoft, and Palantir all want the entire enterprise wallet. Even early winners like ServiceNow are trading back at 2023 prices as the market sorts winners from losers.

Naysayers are pointing to signs of "bubble behavior": circular revenue deals where NVIDIA invests into OpenAI which buys NVIDIA hardware; depreciation concerns as chip cycles accelerate; unsustainable spending with OpenAI committing to nearly $1T in compute while burning $9B a year; and questionable valuations across asset classes, with sketchy pricing practices and stressed debt markets.

The behavior – irrational exuberance, circular revenue, fantastical projections – all just screams bubble. You can hear the echoes of the dot-com bubble when people speak of tokens as proof of value. Or the telecom overbuild. Or the 2008 financial crisis with the funky debt.

The lynchpin to the bull case is simple: AI needs to deliver value. We believe it will, and then some.

In Defense of the Bull Case

The historical differences that defend Jensen's bull case matter:

- 2001: Overbuilt fiber sat at 5% utilization. Today, every GPU yearns to run at capacity. Even a 1% increase in utilization can be a billion dollar revenue opportunity.

- 2008: Money flow built on unpayable consumer debt with no cash generation. Today, hyperscalers with $500B+ annual free cash flow invest in productive infrastructure. Meta, Microsoft, Google, Amazon, SpaceX are good debtors.

OpenAI went from $2B, to $6B, to $20B in revenue in the last three years. Anthropic went from $1B to $9B in 2025 and is on track for $30B this year. This is with only 10-20% global consumer usage, no ad monetization, and barely cracking the enterprise opportunity.

Circular revenue becomes problematic when no value is created. NVIDIA invests in OpenAI and CoreWeave, then sells them GPUs with usage guarantees. But this reflects genuine scarcity and real demand – NVIDIA captures value at multiple points in an actually expanding market, not recycling dollars in a closed loop.

And perhaps most importantly, geopolitical competition removes the option to slow down. There's consensus we cannot cede AI leadership to China. That requires more compute.

An Unprecedented Opportunity for Value Creation

There maybe should be a rule in investing that you're not allowed to say, “this time things are different.” But ... the all-important word in that sentence is “time.” We are undoubtedly in bubble territory. I got into tech in 2010 and, for 12 years, I heard talk of bubbles. And then the bubble burst. The value creation in that time was astronomical, and it will be de minimis compared to what is taking place now. Said another way, we believe years of demand, endless innovation, and cash-rich companies financing the build point to the fact that things are different this time, for now.

We believe the next phase of compute will require more than iteration. It will require step functions. In some cases, discontinuous jumps in architecture, efficiency, and system design that incumbents are not economically or culturally positioned to lead. In moments like this, the opportunities emerge not because incumbents are asleep, but because they are rationally bound to the status quo. In moments like this, the bottlenecks move faster than the incumbents.

The Founders Building the Future

Ultimately, this thesis is brought to life by founders ambitious enough to build where the stack is hardest. The ones we are most excited to back combine deep technical realism with a willingness to challenge what the system has accepted for decades.

A new generation is showing up. Semiconductor and computer architecture programs are surging, and young builders want to rebuild the physical foundations of the modern economy. They bring brains, audacity, and an AI native intuition for where the world is heading.

The teams that win, though, will pair that first principles ambition with seasoned builders who know how to ship silicon, scale systems, and execute in the real world.

Since 2023, we have taken a high-conviction approach to investing behind this view. We have backed the teams at Etched, Atero (acquired by Crusoe), Haiqu, and several more in stealth across memory, alternative computing, and other layers of the stack.

Compute is the limiting factor in a world of accelerating intelligence. The token exponential shows no signs of slowing, and the resulting pressure continues to expose structural cracks that incumbents alone cannot repair. History suggests that moments like this create rare openings for new entrants.

If you are building in compute, now is the moment. We want to meet you early, and partner with you as you shape what comes next.